An Example iOS 6 iPad Touch, Multitouch and Tap Application



The previous chapter covered the basic concepts behind the handling of user interaction with an iPad touch screen. This chapter works through a tutorial designed to highlight the handling of taps and touches. Topics covered include the detection of single and multiple taps and touches, identifying whether a user single- or double-tapped the device display, and extracting information about a touch or tap from the corresponding event object.

The Example iOS 6 iPad Tap and Touch Application

The example application created in the course of this tutorial will consist of a view and some labels. The view object’s view controller will implement a number of the touch screen event methods outlined in An Overview of iOS 6 iPad Multitouch, Taps and Gestures, and will update the status labels to reflect the detected activity. The application will, for example, report the number of fingers touching the screen, the number of taps performed and the most recent touch event that was triggered. In the next chapter, entitled Detecting iOS 6 iPad Touch Screen Gesture Motions, we will look more closely at detecting the motion of touches.

Creating the Example iOS Touch Project

Begin by launching the Xcode development environment and selecting the option to create a new project. Select the iOS Application Single View Application template, choose the iPad device option, and enter Touch as both the project name and the class prefix. Make sure that Storyboard support and Automatic Reference Counting are enabled. When the main Xcode project screen appears we are ready to start writing the code for our application.

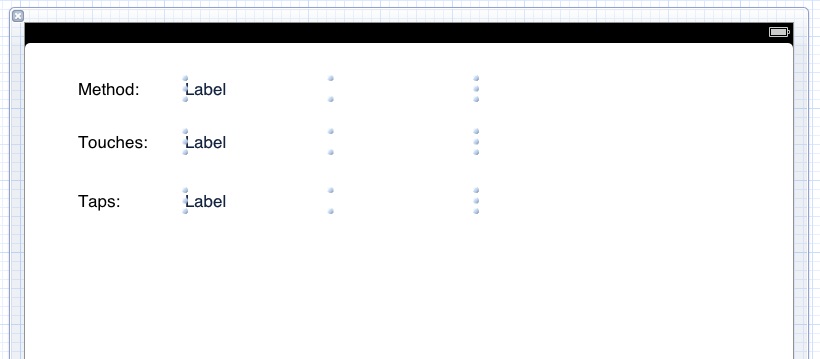

Designing the User Interface

Load the storyboard by selecting the MainStoryboard.storyboard file. Using Interface Builder, modify the user interface by adding label components from the Object library (View -> Utilities -> Show Object Library) and modifying properties until the view appears as outlined in Figure 47-1.

When adding the labels to the right of the view, be sure to stretch them so that they reach beyond the center of the view area.

Figure 47-1

Select the label to the right of the “Method:” label, display the Assistant Editor panel and verify that the editor is displaying the contents of the TouchViewController.h file. Ctrl-click on the same label object and drag to a position just below the @interface line in the Assistant Editor. Release the line and, in the resulting connection dialog, establish an outlet connection named methodStatus.

Repeat the above steps to establish outlet connections for the remaining label objects to properties named touchStatus and tapStatus.
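Once all three connections are in place, the header file should look something like the following sketch. The (strong, nonatomic) attributes shown here are an assumption; the attributes Xcode actually generates may differ depending on the version and the options chosen in the connection dialog.

```objc
// TouchViewController.h
// Sketch of the expected header after the three outlets have been connected.
#import <UIKit/UIKit.h>

@interface TouchViewController : UIViewController

// Outlets created by Ctrl-dragging from each status label.
// The property attributes are an assumption; Xcode may generate different ones.
@property (strong, nonatomic) IBOutlet UILabel *methodStatus;
@property (strong, nonatomic) IBOutlet UILabel *touchStatus;
@property (strong, nonatomic) IBOutlet UILabel *tapStatus;

@end
```

With automatic property synthesis, each property is backed by an instance variable with a leading underscore (for example _methodStatus), which is how the labels are referenced in the code later in this chapter.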

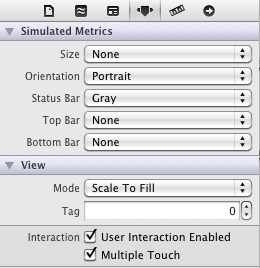

Enabling Multitouch on the View

By default, views are configured to respond only to single touches (in other words, a single finger touching or tapping the screen at any one time). For the purposes of this example we plan to detect multiple touches, and enabling this support requires a change to an attribute of the view object. To achieve this, click on the background of the View window, display the Attributes Inspector (View -> Utilities -> Show Attributes Inspector) and make sure that the Multiple Touch option is selected in the Interaction section at the bottom of the window:

Figure 47-2
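The same setting can also be applied in code rather than in Interface Builder. The following is a minimal sketch, assuming the change is made in the view controller's viewDidLoad method; it sets the multipleTouchEnabled property of UIView, which is what the Multiple Touch option controls.

```objc
// TouchViewController.m
// Sketch: enabling multitouch in code instead of in the inspector.
#import "TouchViewController.h"

@implementation TouchViewController

- (void)viewDidLoad {
    [super viewDidLoad];
    // Programmatic equivalent of selecting the Multiple Touch option.
    // Without this, the view receives only the first touch of a sequence.
    self.view.multipleTouchEnabled = YES;
}

@end
```

If the option has already been selected in the Attributes Inspector, this code is not required.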

Implementing the touchesBegan Method

When the user touches the screen, the touchesBegan method of the first responder is called. In order to capture these event types, we need to implement this method in our view controller. In the Xcode project navigator, select the TouchViewController.m file and add the touchesBegan method as follows:

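    - (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event {
        // Number of touch objects delivered with this event
        NSUInteger touchCount = [touches count];
        // Tap count reported by one of those touch objects
        NSUInteger tapCount = [[touches anyObject] tapCount];

        _methodStatus.text = @"touchesBegan";
        // Cast to unsigned long so that %lu is correct on 32-bit and 64-bit builds
        _touchStatus.text = [NSString stringWithFormat:@"%lu touches", (unsigned long)touchCount];
        _tapStatus.text = [NSString stringWithFormat:@"%lu taps", (unsigned long)tapCount];
    }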

This method obtains a count of the touch objects contained in the touches set and assigns it to a variable. Note that this set holds only the touches that began with this event, so the count typically reflects the number of fingers that have just made contact with the screen rather than every finger currently resting on it. The method then gets the tap count from one of the touch objects. Finally, the code updates the methodStatus label to indicate that the touchesBegan method has been triggered, constructs strings indicating the number of touches and taps detected, and displays the information on the touchStatus and tapStatus labels accordingly.
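If a count of every touch associated with the event is needed, the allTouches method of the UIEvent object can be used alongside the touches set. The following sketch illustrates the difference; logTouchCounts:forEvent: is a hypothetical helper introduced purely for illustration and is not part of the example project.

```objc
// TouchViewController.m
// Sketch: comparing the touches set with the event's full set of touches.
#import "TouchViewController.h"

@implementation TouchViewController

// Hypothetical helper; call it from touchesBegan:withEvent: to try it out.
- (void)logTouchCounts:(NSSet *)touches forEvent:(UIEvent *)event {
    // Touches passed to the method for the current phase only
    NSUInteger phaseCount = [touches count];
    // Every touch associated with the event, whatever its phase
    NSUInteger totalCount = [[event allTouches] count];

    NSLog(@"%lu in this phase, %lu in total",
          (unsigned long)phaseCount, (unsigned long)totalCount);
}

@end
```

Placing one finger on the screen and then a second should, under these assumptions, log a phase count of 1 and a total of 2 for the second touch.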

Implementing the touchesMoved Method

When the user moves one or more fingers currently in contact with the surface of the iPad touch screen, the touchesMoved method is called repeatedly until the movement ceases. In order to capture these events it is necessary to implement the touchesMoved method in our view controller class:

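    - (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event {
        // Number of touch objects that moved in this event
        NSUInteger touchCount = [touches count];
        // Tap count reported by one of those touch objects
        NSUInteger tapCount = [[touches anyObject] tapCount];

        _methodStatus.text = @"touchesMoved";
        // Cast to unsigned long so that %lu is correct on 32-bit and 64-bit builds
        _touchStatus.text = [NSString stringWithFormat:@"%lu touches", (unsigned long)touchCount];
        _tapStatus.text = [NSString stringWithFormat:@"%lu taps", (unsigned long)tapCount];
    }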

Once again we report the number of touches and taps detected and indicate to the user that this time the touchesMoved method is being triggered.

Implementing the touchesEnded Method

When the user removes a finger from the screen, the touchesEnded method is called. We can, therefore, implement this method as follows:

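    - (void)touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event {
        // Number of touch objects that ended in this event
        NSUInteger touchCount = [touches count];
        // Tap count reported by one of those touch objects
        NSUInteger tapCount = [[touches anyObject] tapCount];

        _methodStatus.text = @"touchesEnded";
        // Cast to unsigned long so that %lu is correct on 32-bit and 64-bit builds
        _touchStatus.text = [NSString stringWithFormat:@"%lu touches", (unsigned long)touchCount];
        _tapStatus.text = [NSString stringWithFormat:@"%lu taps", (unsigned long)tapCount];
    }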
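Although not part of the steps in this chapter, UIKit also provides a touchesCancelled method, which is called when the system interrupts a touch sequence (for example, when a system alert appears). A minimal sketch of such a method, assuming the same three outlets and following the pattern of the methods above, might read as follows:

```objc
// TouchViewController.m
// Sketch: reporting cancelled touches in the same way as the other events.
#import "TouchViewController.h"

@implementation TouchViewController

- (void)touchesCancelled:(NSSet *)touches withEvent:(UIEvent *)event {
    // Number of touch objects cancelled by the system
    NSUInteger touchCount = [touches count];
    // Tap count reported by one of those touch objects
    NSUInteger tapCount = [[touches anyObject] tapCount];

    _methodStatus.text = @"touchesCancelled";
    _touchStatus.text = [NSString stringWithFormat:@"%lu touches",
                         (unsigned long)touchCount];
    _tapStatus.text = [NSString stringWithFormat:@"%lu taps",
                       (unsigned long)tapCount];
}

@end
```

In the example project this method would be added to the existing implementation section rather than to a new one.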

Getting the Coordinates of a Touch

Although not part of this particular example, it is worth knowing that the coordinates of the location on the screen where a touch has been detected may be obtained in the form of a CGPoint structure by calling the locationInView method of the touch object. For example:

    UITouch *touch = [touches anyObject];
    CGPoint point = [touch locationInView:self.view];

The X and Y coordinates may subsequently be extracted from the CGPoint structure by accessing the corresponding elements:

    CGFloat pointX = point.x;
    CGFloat pointY = point.y;
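When more than one finger is on the screen, the same technique can be applied to each touch object in turn. The following sketch loops over a set of touches and logs the location of each one; logTouchLocations: is a hypothetical helper that does not appear in the example project and could be called from any of the touch event methods.

```objc
// TouchViewController.m
// Sketch: logging the location of every touch in a set.
#import "TouchViewController.h"

@implementation TouchViewController

// Hypothetical helper; pass it the touches set from a touch event method.
- (void)logTouchLocations:(NSSet *)touches {
    for (UITouch *touch in touches) {
        // Coordinates are relative to the view controller's view
        CGPoint point = [touch locationInView:self.view];
        // NSStringFromCGPoint formats the point as a string for logging
        NSLog(@"Touch at %@", NSStringFromCGPoint(point));
    }
}

@end
```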

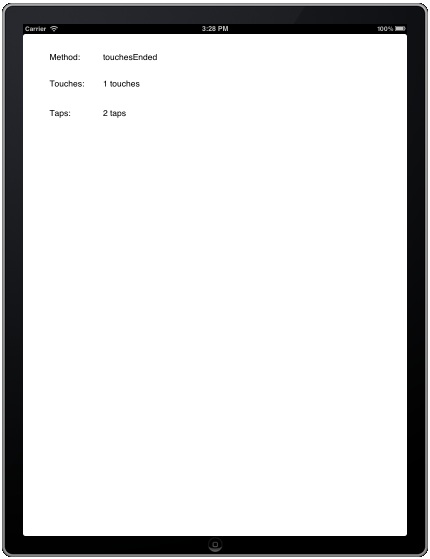

Building and Running the Touch Example Application

Build and run the application by clicking on the Run button located in the toolbar of the main Xcode project window. The application will run in the iOS Simulator where you should be able to click with the mouse pointer to simulate touches and taps. With each click, the status labels should update to reflect the interaction:

Figure 47-3

Of course, since a mouse provides only one pointer, multitouch support in the iOS Simulator is limited: holding down the Option key while clicking simulates a two-finger touch, but nothing beyond that. To try out the full multitouch behavior of this application, run it on a physical iPad device. For steps on how to achieve this, refer to the chapter entitled Testing iOS 6 Apps on the iPad – Developer Certificates and Provisioning Profiles.
