An Introduction to SiriKit

Learn SwiftUI and take your iOS Development to the Next Level |

Although Siri has been part of iOS for a number of years, it was not until the introduction of iOS 10 that some of the power of Siri was made available to app developers through SiriKit. Though far from providing full access to the capabilities of Siri, SiriKit does allow certain areas of application functionality to be initiated through the Siri interface. An app designed to send messages, for example, may be integrated into Siri to allow messages to be composed and sent using voice commands. Similarly, a time management app might use SiriKit to allow entries to be made in the Reminders app.

This chapter will provide an overview of SiriKit and outline the ways in which apps are configured to integrate SiriKit support. The next chapter, entitled An iOS 11 Example SiriKit Messaging Extension, will provide a walk-through of an existing messaging app example integrated with SiriKit. Following on from there, the chapter entitled An iOS 11 SiriKit Photo Search Tutorial will create a new project that uses SiriKit to allow Siri-based photo searches to be performed on iOS devices.

Siri and SiriKit

Most iOS users will no doubt be familiar with Siri, Apple’s virtual digital assistant. Pressing and holding the home button, or saying “Hey Siri” launches Siri and allows a range of tasks to be performed by speaking in a conversational manner. Selecting the playback of a favorite song, asking for turn-by-turn directions to a location or requesting information about the weather are all examples of tasks that Siri can perform in response to voice commands.

With the introduction of SiriKit in iOS 10, some of the capabilities of Siri are now available to iOS app developers.

When an app integrates with SiriKit, Siri handles all of the tasks associated with communicating with the user and interpreting the meaning and context of the user’s words. Siri then packages up the user’s request into an intent and passes it to the iOS app. It is then the responsibility of the iOS app to verify that enough information has been provided in the intent to perform the task and to instruct Siri to request any missing information. Once the intent contains all of the necessary data, the app performs the requested task and notifies Siri of the results. These results will be presented either by Siri or within the iOS app itself.

SiriKit Domains

SiriKit can only be used with apps to perform tasks that fit into narrowly defined categories, also referred to as domains. As of iOS 11, Siri can only be used by apps when performing tasks that fit into one or more of the following domains:

- Messaging

- Notes and Lists

- Payments

- Visual Codes

- Photos

- Workouts

- Ride Booking

- CarPlay

- Car Commands

- VoIP Calling

- Restaurant Reservations

SiriKit Intents

Each domain allows a predefined set of tasks, or intents, to be requested by the user for fulfillment by an app. An intent represents a specific task of which Siri is aware and which SiriKit expects an integrated iOS app to be able to perform. The Messaging domain, for example, includes intents for sending and searching for messages, while the Workouts domain contains intents for choosing, starting and finishing workouts. When the user makes a request of an app via Siri, the request is placed into an intent object of the corresponding type and passed to the app for handling.
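As a rough illustration of what an intent object carries, the following sketch inspects a few intent types from the Intents framework. The `summary(of:)` helper is hypothetical and exists only for this example; the properties it reads (`recipients`, `content`, `albumName`, `workoutName`) are the ones exposed by the corresponding intent classes.

```swift
import Intents

// Hypothetical helper (not part of SiriKit): describes the data
// that Siri has packaged into an intent object.
func summary(of intent: INIntent) -> String {
    switch intent {
    case let message as INSendMessageIntent:
        // Messaging domain: recipients and message text
        let names = (message.recipients ?? []).map { $0.displayName }
        let text = message.content ?? ""
        return "Send '\(text)' to \(names.joined(separator: ", "))"
    case let search as INSearchForPhotosIntent:
        // Photos domain: album name is one of several optional parameters
        let album = search.albumName ?? "any album"
        return "Search photos in \(album)"
    case let workout as INStartWorkoutIntent:
        // Workouts domain: the spoken name of the workout
        let name = workout.workoutName?.spokenPhrase ?? "unnamed workout"
        return "Start \(name)"
    default:
        return "Unhandled intent type: \(type(of: intent))"
    }
}
```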

How SiriKit Integration Works

Siri integration is performed via the iOS extension mechanism (a topic covered in detail starting with the chapter entitled An Introduction to Extensions in iOS 11). Extensions are added as targets to the app project within Xcode in the same way as other extension types. SiriKit provides two types of extension, the key one being the Intents Extension. This extension contains an intent handler which is subclassed from the INExtension class of the Intents framework and contains the methods called by Siri during the process of communicating with the user. It is the responsibility of the intent handler to verify that Siri has collected all of the required information from the user, and then to execute the task defined in the intent.

The second extension type is the UI Extension. This extension is optional and comprises a storyboard file and a UIViewController subclass (named IntentViewController in the Xcode template) that conforms to the INUIHostedViewControlling protocol. When provided, Siri will use this UI when presenting information to the user. This can be useful for including additional information within the Siri user interface or for bringing the branding and theme of the main iOS app into the Siri environment.

When the user makes a request of an app via Siri, the first method to be called is the handler(for:) method of the intent handler class contained in the Intents Extension. This method is passed the current intent object and returns a reference to the object that will serve as the intent handler. This can either be the intent handler class itself or another class that has been configured to implement one or more intent handling protocols.
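A minimal sketch of this entry point might look as follows. The method is spelled `handler(for:)` in current Swift SDKs. `MessageIntentHandler` is a hypothetical class name chosen for illustration, and the handle method shown simply reports success without sending anything.

```swift
import Intents

// Principal class of the Intents Extension.
class IntentHandler: INExtension {

    // Called first by Siri; returns the object that will handle the intent.
    override func handler(for intent: INIntent) -> Any {
        if intent is INSendMessageIntent {
            // Delegate messaging intents to a dedicated handler object
            return MessageIntentHandler()
        }
        // Otherwise let this class act as the handler itself
        return self
    }
}

// Hypothetical handler class adopting one intent handling protocol.
class MessageIntentHandler: NSObject, INSendMessageIntentHandling {

    func handle(intent: INSendMessageIntent,
                completion: @escaping (INSendMessageIntentResponse) -> Void) {
        // Placeholder: a real app would send the message here
        completion(INSendMessageIntentResponse(code: .success, userActivity: nil))
    }
}
```

Routing each intent type to its own handler object keeps the extension's principal class small, though returning `self` is equally valid when only one or two intent types are supported.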

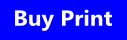

The intent handler declares the types of intent it is able to handle and must then implement all of the protocol methods required to support those particular intent types. These methods are then called as part of a sequence of phases that make up the intent handling process as illustrated in Figure 95 1:

Figure 95-1

The first step after Siri calls the handler method involves calls to a series of methods to resolve the parameters associated with the intent.


Resolving Intent Parameters

Each intent type has associated with it a group of parameters that are used to provide details about the task to be performed by the app. While many parameters are mandatory, some are optional. The intent to send a message must, for example, contain a valid recipient parameter in order for a message to be sent. A number of parameters for a Photo search intent, on the other hand, are optional. A user might, for example, want to search for photos containing particular people, regardless of the date that the photos were taken.

Siri knows all of the possible parameters for each intent type, and for each parameter Siri will ask the app extension’s intent handler to resolve the parameter via a corresponding method call. If Siri already has a parameter, it will ask the intent handler to verify that the parameter is valid. If Siri does not yet have a value for a parameter it will ask the intent handler if the parameter is required. If the intent handler notifies Siri that the parameter is not required, Siri will not ask the user to provide it. If, on the other hand, the parameter is needed, Siri will ask the user to provide the information.

Consider, for example, a photo search app called CityPicSearch that displays all the photos taken in a particular city. The user might begin by saying the following: “Hey Siri. Find photos using CityPicSearch.”

From this sentence, Siri will infer that a photo search using the CityPicSearch app has been requested. Siri will know that CityPicSearch has been integrated with SiriKit and that the app has registered that it supports the INSearchForPhotosIntent intent type. Siri also knows that the INSearchForPhotosIntent intent allows photos to be searched for based on date created, people in the photo, the location of the photo and the photo album in which the photo resides. What Siri does not know, however, is which of these parameters the CityPicSearch app actually needs to perform the task. To find out this information, Siri will call the resolve method for each of these parameters on the app’s intent handler. In each case the intent handler will respond indicating whether or not the parameter is required. In this case, the intent handler’s resolveLocationCreated method will return a status indicating that the parameter is mandatory. On receiving this notification, Siri will request the missing information from the user by saying: “Find pictures from where?”

The user will then provide a location which Siri will pass to the app by calling resolveLocationCreated once again, including the selection in the intent object. The app will verify the validity of the location and indicate to Siri that the parameter is valid. This process will repeat for each parameter supported by the intent type until all necessary parameter requirements have been satisfied.
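The resolution exchange described above could be sketched along the following lines for the CityPicSearch example. The class name `CityPicSearchHandler` is an assumption made for illustration, and the handle method is a placeholder included only so that the protocol's one required method is present.

```swift
import Intents

// Hypothetical intent handler for the CityPicSearch example.
class CityPicSearchHandler: NSObject, INSearchForPhotosIntentHandling {

    // The location is mandatory for this app: ask Siri to obtain it
    // if it is missing, otherwise confirm the value Siri supplied.
    func resolveLocationCreated(for intent: INSearchForPhotosIntent,
                                with completion: @escaping (INPlacemarkResolutionResult) -> Void) {
        if let placemark = intent.locationCreated {
            completion(INPlacemarkResolutionResult.success(with: placemark))
        } else {
            // Siri will prompt the user, then call this method again
            completion(INPlacemarkResolutionResult.needsValue())
        }
    }

    // The creation date is not needed, so Siri will not ask for it.
    func resolveDateCreated(for intent: INSearchForPhotosIntent,
                            with completion: @escaping (INDateComponentsRangeResolutionResult) -> Void) {
        completion(INDateComponentsRangeResolutionResult.notRequired())
    }

    // Required by the protocol; placeholder implementation only.
    func handle(intent: INSearchForPhotosIntent,
                completion: @escaping (INSearchForPhotosIntentResponse) -> Void) {
        completion(INSearchForPhotosIntentResponse(code: .continueInApp, userActivity: nil))
    }
}
```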

Techniques are also available to help Siri and the user clarify ambiguous parameters. The intent handler can, for example, return a list of possible options for a parameter which will then be presented to the user for selection. If the user were to ask an app to send a message to “John”, the resolveRecipients method would be called by Siri. The method might perform a search of the contacts list and find multiple entries where the contact’s first name is John. In this situation the method could return a list of contacts with the first name of John. Siri would then ask the user to clarify which “John” is the intended recipient by presenting the list of matching contacts.
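One possible shape for this disambiguation logic is shown below, assuming the iOS 11 form of the resolveRecipients method, which reports one result per recipient. The `contacts(matching:)` lookup is a hypothetical stand-in for whatever contact store the app actually uses, and the handle method is a placeholder.

```swift
import Intents

class RecipientResolvingHandler: NSObject, INSendMessageIntentHandling {

    // Hypothetical lookup: a real app would search its own contacts here.
    private func contacts(matching person: INPerson) -> [INPerson] {
        return []
    }

    func resolveRecipients(for intent: INSendMessageIntent,
                           with completion: @escaping ([INSendMessageRecipientResolutionResult]) -> Void) {
        guard let recipients = intent.recipients, !recipients.isEmpty else {
            // No recipient yet: ask Siri to request one from the user
            completion([INSendMessageRecipientResolutionResult.needsValue()])
            return
        }

        let results = recipients.map { recipient -> INSendMessageRecipientResolutionResult in
            let matches = contacts(matching: recipient)
            switch matches.count {
            case 0:
                // Nobody by that name
                return INSendMessageRecipientResolutionResult.unsupported()
            case 1:
                // Exactly one match: the parameter is resolved
                return INSendMessageRecipientResolutionResult.success(with: matches[0])
            default:
                // Several matches (e.g. multiple Johns): let the user choose
                return INSendMessageRecipientResolutionResult.disambiguation(with: matches)
            }
        }
        completion(results)
    }

    // Required by the protocol; placeholder implementation only.
    func handle(intent: INSendMessageIntent,
                completion: @escaping (INSendMessageIntentResponse) -> Void) {
        completion(INSendMessageIntentResponse(code: .success, userActivity: nil))
    }
}
```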

Once the parameters have either been resolved or indicated as not being required, Siri will call the confirm method of the intent handler.

The Confirm Method

The confirm method is implemented within the extension intent handler and is called by Siri when all of the intent parameters have been resolved. This method provides the intent handler with an opportunity to make sure that it is ready to handle the intent. If the confirm method reports a ready status, Siri calls the handle method.
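As a sketch, a confirm method for a messaging intent might check that the app's messaging service can be reached before reporting that it is ready. The `isServiceReachable` property is a hypothetical placeholder for such a check, and the handle method is included only to satisfy the protocol.

```swift
import Intents

class ConfirmingMessageHandler: NSObject, INSendMessageIntentHandling {

    // Hypothetical readiness check; a real app might test login state
    // or network connectivity here.
    private var isServiceReachable: Bool {
        return true
    }

    // Called once all parameters are resolved, before handle is called.
    func confirm(intent: INSendMessageIntent,
                 completion: @escaping (INSendMessageIntentResponse) -> Void) {
        let code: INSendMessageIntentResponseCode =
            isServiceReachable ? .ready : .failureMessageServiceNotAvailable
        completion(INSendMessageIntentResponse(code: code, userActivity: nil))
    }

    // Required by the protocol; placeholder implementation only.
    func handle(intent: INSendMessageIntent,
                completion: @escaping (INSendMessageIntentResponse) -> Void) {
        completion(INSendMessageIntentResponse(code: .success, userActivity: nil))
    }
}
```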

The Handle Method

The handle method is where the activity associated with the intent is performed. Once the task is completed, a response is passed to Siri. The form of the response will depend on the type of activity performed. For example, a photo search activity will return a count of the number of matching photos, while a send message activity will indicate whether the message was sent successfully.
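For a send message intent, a handle method could be outlined roughly as follows. The `send(_:to:)` function is hypothetical and stands in for the app's real delivery code; only the response reporting reflects the SiriKit API.

```swift
import Intents

class MessageSendingHandler: NSObject, INSendMessageIntentHandling {

    // Hypothetical delivery routine; returns true if the message was sent.
    private func send(_ text: String, to recipients: [INPerson]) -> Bool {
        return !text.isEmpty && !recipients.isEmpty
    }

    func handle(intent: INSendMessageIntent,
                completion: @escaping (INSendMessageIntentResponse) -> Void) {
        guard let text = intent.content,
              let recipients = intent.recipients, !recipients.isEmpty else {
            // Required data is missing despite resolution: report failure
            completion(INSendMessageIntentResponse(code: .failure, userActivity: nil))
            return
        }

        // Perform the task, then tell Siri whether it succeeded
        let sent = send(text, to: recipients)
        let code: INSendMessageIntentResponseCode = sent ? .success : .failure
        completion(INSendMessageIntentResponse(code: code, userActivity: nil))
    }
}
```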

The handle method may also return a continueInApp response. This tells Siri that the remainder of the task is to be performed within the main app. On receiving this response, Siri will launch the app, passing in an NSUserActivity object. NSUserActivity is a class that enables the status of an app to be saved and restored. In iOS 10 and later, the NSUserActivity class has an additional property that allows an INInteraction object to be stored along with the app state. Siri uses this interaction property to store the INInteraction object for the session and pass it to the main iOS app. The interaction object, in turn, contains a copy of the intent object which the app can extract to continue processing the activity. A custom NSUserActivity object can be created by the extension and passed to the iOS app. Alternatively, if no custom object is specified, SiriKit will create one by default.
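On the main app side, the intent can be recovered from the user activity in the app delegate. The sketch below assumes a photo search intent; the interaction object is an `INInteraction` from the Intents framework, and `showPhotos(forCity:)` is a hypothetical method standing in for the app's own presentation code.

```swift
import UIKit
import Intents

class AppDelegate: UIResponder, UIApplicationDelegate {

    var window: UIWindow?

    // Called when Siri launches the app after a continueInApp response.
    // (In SDKs before Swift 4.2 the restoration handler took [Any]?.)
    func application(_ application: UIApplication,
                     continue userActivity: NSUserActivity,
                     restorationHandler: @escaping ([UIUserActivityRestoring]?) -> Void) -> Bool {
        // Extract the intent from the interaction stored in the activity
        guard let intent = userActivity.interaction?.intent as? INSearchForPhotosIntent else {
            return false
        }
        showPhotos(forCity: intent.locationCreated?.locality)
        return true
    }

    // Hypothetical: a real app would present its search results here.
    private func showPhotos(forCity city: String?) {
        print("Showing photos for \(city ?? "unknown location")")
    }
}
```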

A photo search intent, for example, would need to use the continueInApp response and user activity object so that photos found during the search can be presented to the user (SiriKit does not currently provide a mechanism for displaying the images from a photo search intent within the Siri user interface).
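A handle method for the photo search case might therefore be sketched as shown here, assuming the iOS 11-era Photos domain API described in this chapter. The `countPhotos(inCity:)` function is a hypothetical placeholder for the app's search logic.

```swift
import Intents

class PhotoSearchResultsHandler: NSObject, INSearchForPhotosIntentHandling {

    // Hypothetical search routine returning the number of matching photos.
    private func countPhotos(inCity city: String?) -> Int {
        return 0
    }

    func handle(intent: INSearchForPhotosIntent,
                completion: @escaping (INSearchForPhotosIntentResponse) -> Void) {
        // Custom activity object that Siri will pass to the main app
        let activity = NSUserActivity(
            activityType: NSStringFromClass(INSearchForPhotosIntent.self))

        // Hand the rest of the task over to the main app
        let response = INSearchForPhotosIntentResponse(code: .continueInApp,
                                                       userActivity: activity)
        response.searchResultsCount = countPhotos(inCity: intent.locationCreated?.locality)
        completion(response)
    }
}
```

Passing `nil` for the user activity would also work, in which case SiriKit creates a default activity object.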

It is important to note that an intent handler class may contain more than one handle method to handle different intent types. A messaging app, for example, would typically have different handle methods for send message and message search intents.


Custom Vocabulary

Clearly Siri has a broad knowledge of vocabulary in a wide range of languages. It is quite possible, however, that your app or app users might use certain words or terms which have no meaning or context for Siri. These terms can be added to your app so that they are recognized by Siri. These custom vocabulary terms are categorized as either user-specific or global.

User-specific terms are terms that apply only to an individual user. This might be a photo album with an unusual name or the nicknames the user has entered for contacts in a messaging app. User-specific terms are registered with Siri from within the main iOS app (not the extension) at application runtime using the setVocabularyStrings(_:of:) method of the INVocabulary class and must be provided in the form of an ordered set (NSOrderedSet) with the most commonly used terms listed first.

User-specific custom vocabulary terms may only be specified for contact and contact group names, photo tag and album names, workout names and CarPlay car profile names. When calling the setVocabularyStrings(_:of:) method with the ordered set, the category type specified must be one of the following:

- contactName

- contactGroupName

- photoTag

- photoAlbumName

- workoutActivityName

- carProfileName
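Registering user-specific terms from the main app could look something like the following, using `setVocabularyStrings(_:of:)` on the shared `INVocabulary` instance. The `registerAlbumNames(_:)` function and the sample album names are assumptions made purely for illustration.

```swift
import Intents

// Hypothetical helper called from the main app (not the extension).
// The array is expected to be ordered with the most used names first.
func registerAlbumNames(_ albumNames: [String]) {
    let orderedNames = NSOrderedSet(array: albumNames)
    INVocabulary.shared().setVocabularyStrings(orderedNames, of: .photoAlbumName)
}

// Example usage with made-up album names:
// registerAlbumNames(["Zanzibar Trip", "Dog Pics", "Old Scans"])
```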

Global vocabulary terms are specific to your app but apply to all app users. These terms are supplied with the app bundle in the form of a property list file named AppIntentVocabulary.plist. These terms are only applicable to workout and ride sharing names.

The Siri User Interface

Each SiriKit domain has a standard user interface layout that is used by default to convey information to the user during the Siri session. The Ride Booking extension, for example, will display information such as the destination and price. These default user interfaces can be customized by adding an intent UI app extension to the project. This topic is covered in the chapter entitled Customizing the SiriKit Intent User Interface.

Summary

SiriKit brings some of the power of Siri to third-party apps, allowing the functionality of an app to be accessed by the user using the Siri virtual assistant interface. Siri integration is currently only available when performing tasks that fall into narrowly defined domains such as messaging, photo searching and workouts. Siri integration uses the standard iOS extensions mechanism. The Intents Extension is responsible for interacting with Siri, while the optional UI Extension provides a way to control the appearance of any results presented to the user within the Siri environment.

All of the interaction with the user is handled by Siri, with the results structured and packaged into an intent. This intent is then passed to the intent handler of the Intents Extension via a series of method calls designed to verify that all the required information has been gathered. The intent is then handled, the requested task performed and the results presented to the user either via Siri or the main iOS app.
