Difference between revisions of "An iOS 10 Real-Time/Live Speech Recognition Tutorial"

(→Designing the User Interface) |

|||

| Line 21: | Line 21: | ||

== Designing the User Interface == | == Designing the User Interface == | ||

| − | + | <htmlet>adsdaqbox_flow</htmlet> | |

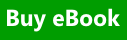

Select the Main.storyboard file, add two Buttons and a Text View component to the scene and configure and position these views so that the layout appears as illustrated in Figure 99-1 below: | Select the Main.storyboard file, add two Buttons and a Text View component to the scene and configure and position these views so that the layout appears as illustrated in Figure 99-1 below: | ||

Latest revision as of 04:44, 10 November 2016

| Previous | Table of Contents | Next |

| Playing Audio on iOS 10 using AVAudioPlayer | An Introduction to SiriKit |

Learn SwiftUI and take your iOS Development to the Next Level |

The previous chapter, entitled An iOS 10 Speech Recognition Tutorial, introduced the Speech framework and the speech recognition capabilities that are now available to app developers with the introduction of the iOS 10 SDK. The chapter also provided a tutorial demonstrating the use of the Speech framework to transcribe a pre-recorded audio file into text.

This chapter will build on this knowledge to create an example project that uses the speech recognition Speech framework to transcribe speech in near real-time.

Creating the Project

Begin by launching Xcode and creating a new Universal single view-based application named LiveSpeech using the Swift programming language.

Designing the User Interface

Figure 99-1

Display the Resolve Auto Layout Issues menu and select the Reset to Suggested Constraints option listed under All Views in View Container, then select the Text View object, display the Attributes Inspector panel and remove the sample Latin text.

Display the Assistant Editor panel and establish outlet connections for the two Buttons named transcribeButton and stopButton. Repeat this process to connect an outlet for the Text View named myTextView. With the Assistant Editor panel still visible, establish action connections from the two Buttons to methods named startTranscribing and stopTranscribing respectively.

Adding the Speech Recognition Permission

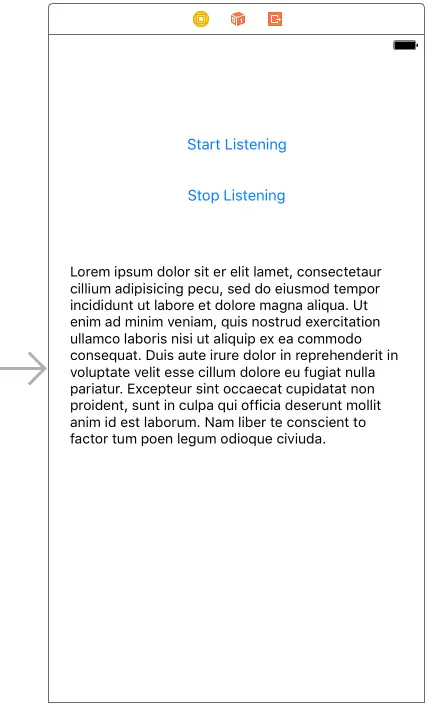

Select the Info.plist file, locate the bottom entry in the list of properties and hover the mouse pointer over the item. When the plus button appears, click on it to add a new entry to the list. From within the dropdown list of available keys, locate and select the Privacy – Speech Recognition Usage Description option as shown in Figure 99-2:

Figure 99-2

Within the value field for the property, enter a message to display to the user when requesting permission to use speech recognition. For example:

Speech recognition services are used by this app to convert speech to text.

Repeat this step to add a Privacy – Microphone Usage Description entry.
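In the underlying Info.plist, these two entries are stored under the raw keys NSSpeechRecognitionUsageDescription and NSMicrophoneUsageDescription. If either is missing, iOS 10 terminates the app as soon as it tries to use the corresponding service, so a quick debug-time check can save some confusion. The helper below is a hypothetical sketch, not part of the chapter's project: it takes the info dictionary as a parameter so that it can be tested on its own, and in the app Bundle.main.infoDictionary could be passed in.

```swift
import Foundation

// Raw Info.plist keys behind the two "Privacy – ..." entries.
let requiredUsageKeys = [
    "NSSpeechRecognitionUsageDescription",
    "NSMicrophoneUsageDescription",
]

// Hypothetical helper: returns the required keys that are absent
// or whose value is empty. In the app, pass Bundle.main.infoDictionary ?? [:].
func missingUsageDescriptions(in info: [String: Any]) -> [String] {
    return requiredUsageKeys.filter { key in
        guard let value = info[key] as? String else { return true }
        return value.trimmingCharacters(in: .whitespaces).isEmpty
    }
}
```

Calling this from viewDidLoad inside an assert would flag a forgotten key during development without affecting release builds.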


Requesting Speech Recognition Authorization

The code to request speech recognition authorization is the same as that used in the previous chapter. Once again, it will be added as a method named authorizeSR within the ViewController.swift file, remembering to import the Speech framework:

import Speech
.
.
.
func authorizeSR() {
    // Ask the user for permission to use speech recognition.
    SFSpeechRecognizer.requestAuthorization { authStatus in
        // Hop to the main queue before touching UIKit objects.
        OperationQueue.main.addOperation {
            switch authStatus {
            case .authorized:
                self.transcribeButton.isEnabled = true
            case .denied:
                self.transcribeButton.isEnabled = false
                self.transcribeButton.setTitle("Speech recognition access denied by user", for: .disabled)
            case .restricted:
                self.transcribeButton.isEnabled = false
                self.transcribeButton.setTitle("Speech recognition restricted on device", for: .disabled)
            case .notDetermined:
                self.transcribeButton.isEnabled = false
                self.transcribeButton.setTitle("Speech recognition not authorized", for: .disabled)
            }
        }
    }
}
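The switch in authorizeSR mixes the decision about how the button should look with the UIKit calls that apply it. As a hedged sketch, that decision can be pulled out into a pure function that is easy to unit test. The AuthStatus enum below is a hypothetical stand-in mirroring the four cases of SFSpeechRecognizerAuthorizationStatus, so the example runs without the Speech framework; the titles are copied from the code above.

```swift
// Hypothetical stand-in mirroring the four cases of
// SFSpeechRecognizerAuthorizationStatus handled in authorizeSR().
enum AuthStatus {
    case authorized, denied, restricted, notDetermined
}

// How the transcribe button should look for a given status.
// A nil title means "leave the existing title unchanged".
struct ButtonConfig: Equatable {
    let isEnabled: Bool
    let disabledTitle: String?
}

// Same decisions as the switch in authorizeSR(), with no UIKit dependency.
func buttonConfig(for status: AuthStatus) -> ButtonConfig {
    switch status {
    case .authorized:
        return ButtonConfig(isEnabled: true, disabledTitle: nil)
    case .denied:
        return ButtonConfig(isEnabled: false,
                            disabledTitle: "Speech recognition access denied by user")
    case .restricted:
        return ButtonConfig(isEnabled: false,
                            disabledTitle: "Speech recognition restricted on device")
    case .notDetermined:
        return ButtonConfig(isEnabled: false,
                            disabledTitle: "Speech recognition not authorized")
    }
}
```

With this in place, the closure passed to OperationQueue.main.addOperation would only need to set isEnabled from the returned value and, when disabledTitle is non-nil, call setTitle(_:for: .disabled).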

Remaining in the ViewController.swift file, locate and modify the viewDidLoad method to call the authorizeSR method:


override func viewDidLoad() {
    super.viewDidLoad()
    authorizeSR()
}

Declaring and Initializing the Speech and Audio Objects

To transcribe speech in real-time, the app requires instances of the SFSpeechRecognizer, SFSpeechAudioBufferRecognitionRequest and SFSpeechRecognitionTask classes. In addition to these speech recognition objects, the code also needs an AVAudioEngine instance to stream audio from the microphone into audio buffers for transcription. Edit the ViewController.swift file and declare constants and variables to store these instances as follows:

import UIKit
import Speech

class ViewController: UIViewController {

    @IBOutlet weak var transcribeButton: UIButton!
    @IBOutlet weak var stopButton: UIButton!
    @IBOutlet weak var myTextView: UITextView!

    private let speechRecognizer = SFSpeechRecognizer(locale: Locale(identifier: "en-US"))!
    private var speechRecognitionRequest: SFSpeechAudioBufferRecognitionRequest?
    private var speechRecognitionTask: SFSpeechRecognitionTask?
    private let audioEngine = AVAudioEngine()
.
.
.


Starting the Transcription

The first step in initiating speech recognition is to add some code to the startTranscribing action method. Since several of the method calls needed to perform speech recognition can throw errors, the action method calls a second method, declared with the throws keyword, to perform the actual work. (Adding the throws keyword to the startTranscribing method itself will cause a crash at runtime, because a throwing method does not match the action method signature expected by the storyboard connection.) Within the ViewController.swift file, modify the startTranscribing action method and add a new method named startSession:

.
.
.
@IBAction func startTranscribing(_ sender: AnyObject) {
    transcribeButton.isEnabled = false
    stopButton.isEnabled = true
    // try! terminates the app if startSession() throws.
    try! startSession()
}

func startSession() throws {
    // Cancel any recognition task left over from a previous session.
    if let recognitionTask = speechRecognitionTask {
        recognitionTask.cancel()
        self.speechRecognitionTask = nil
    }

    // Configure the shared audio session for recording.
    let audioSession = AVAudioSession.sharedInstance()
    try audioSession.setCategory(AVAudioSessionCategoryRecord)

    // Create a request that accepts audio buffers as they arrive.
    speechRecognitionRequest = SFSpeechAudioBufferRecognitionRequest()
    guard let recognitionRequest = speechRecognitionRequest else { fatalError("SFSpeechAudioBufferRecognitionRequest object creation failed") }
    guard let inputNode = audioEngine.inputNode else { fatalError("Audio engine has no input node") }

    // Deliver intermediate results while the user is still speaking.
    recognitionRequest.shouldReportPartialResults = true

    speechRecognitionTask = speechRecognizer.recognitionTask(with: recognitionRequest) { result, error in
        var finished = false
        if let result = result {
            // Each result holds the best transcription of everything heard so far.
            self.myTextView.text = result.bestTranscription.formattedString
            finished = result.isFinal
        }
        if error != nil || finished {
            // Tear down the audio pipeline and reset for the next session.
            self.audioEngine.stop()
            inputNode.removeTap(onBus: 0)
            self.speechRecognitionRequest = nil
            self.speechRecognitionTask = nil
            self.transcribeButton.isEnabled = true
        }
    }

    // Forward each captured audio buffer to the recognition request.
    let recordingFormat = inputNode.outputFormat(forBus: 0)
    inputNode.installTap(onBus: 0, bufferSize: 1024, format: recordingFormat) { (buffer: AVAudioPCMBuffer, when: AVAudioTime) in
        self.speechRecognitionRequest?.append(buffer)
    }

    audioEngine.prepare()
    try audioEngine.start()
}
.
.
.
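Because startTranscribing calls startSession with try!, any error thrown by startSession (for example, if the audio session category cannot be set or the audio engine fails to start) will terminate the app. A hedged alternative is to catch the error inside the action method and restore the buttons. The sketch below models this outside UIKit: TranscriptionController and SessionError are hypothetical stand-ins for the view controller and for whatever AVAudioSession or AVAudioEngine might throw, and the sessionShouldFail flag simply simulates a failure.

```swift
// Hypothetical error standing in for an AVAudioSession/AVAudioEngine failure.
enum SessionError: Error {
    case audioUnavailable
}

// Hypothetical stand-in for the view controller's button state.
final class TranscriptionController {
    var transcribeEnabled = true
    var stopEnabled = false
    var lastErrorMessage: String?
    let sessionShouldFail: Bool

    init(sessionShouldFail: Bool) {
        self.sessionShouldFail = sessionShouldFail
    }

    // Stand-in for startSession(): throws instead of configuring audio.
    func startSession() throws {
        if sessionShouldFail { throw SessionError.audioUnavailable }
    }

    // Non-throwing, like an @IBAction; errors are caught rather than try!-ed.
    func startTranscribing() {
        transcribeEnabled = false
        stopEnabled = true
        do {
            try startSession()
        } catch {
            // Roll back to the idle state so the user can try again.
            transcribeEnabled = true
            stopEnabled = false
            lastErrorMessage = "Unable to start transcription: \(error)"
        }
    }
}
```

In the real app, the catch block would set transcribeButton and stopButton instead of the two flags and could display the message in myTextView.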


The first tasks performed within the startSession method are to check whether a previous recognition task is running and, if so, cancel it. The method then configures the audio session for recording and assigns a new SFSpeechAudioBufferRecognitionRequest object to the speechRecognitionRequest variable declared previously. A test is then performed to make sure that the SFSpeechAudioBufferRecognitionRequest object was successfully created; if creation failed, fatalError() is called and the app terminates. The same approach is used to obtain the input node of the audio engine, after which the request is configured to report partial results so that text appears while the user is still speaking:

if let recognitionTask = speechRecognitionTask {
    recognitionTask.cancel()
    self.speechRecognitionTask = nil
}

let audioSession = AVAudioSession.sharedInstance()
try audioSession.setCategory(AVAudioSessionCategoryRecord)

speechRecognitionRequest = SFSpeechAudioBufferRecognitionRequest()
guard let recognitionRequest = speechRecognitionRequest else { fatalError("SFSpeechAudioBufferRecognitionRequest object creation failed") }
guard let inputNode = audioEngine.inputNode else { fatalError("Audio engine has no input node") }

recognitionRequest.shouldReportPartialResults = true
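The check at the top of startSession prevents two recognition tasks from feeding the same Text View. The pattern (cancel any existing task, clear the reference, then create a new one) can be sketched in isolation. FakeTask below is a hypothetical stand-in for SFSpeechRecognitionTask that only records whether cancel() was called, and Session is a hypothetical holder playing the role of the view controller.

```swift
// Hypothetical stand-in for SFSpeechRecognitionTask.
final class FakeTask {
    let id: Int
    private(set) var isCancelled = false
    init(id: Int) { self.id = id }
    func cancel() { isCancelled = true }
}

final class Session {
    // Optional, like speechRecognitionTask: nil when no task exists.
    private(set) var currentTask: FakeTask?
    private var nextID = 1

    func start() -> FakeTask {
        // Same shape as the opening of startSession():
        // cancel a still-running task and drop the reference.
        if let task = currentTask {
            task.cancel()
            currentTask = nil
        }
        let task = FakeTask(id: nextID)
        nextID += 1
        currentTask = task
        return task
    }
}
```

Starting a second session therefore always cancels the first task before the new one takes over.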

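Next, the recognition task is created. Its completion handler is called each time a new result is available: it displays the best transcription in the Text View and, once the final result arrives or an error occurs, stops the audio engine, removes the tap from the input node and resets the request, the task and the transcribe button ready for the next session: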

speechRecognitionTask = speechRecognizer.recognitionTask(with: recognitionRequest) { result, error in
    var finished = false
    if let result = result {
        self.myTextView.text = result.bestTranscription.formattedString
        finished = result.isFinal
    }
    if error != nil || finished {
        self.audioEngine.stop()
        inputNode.removeTap(onBus: 0)
        self.speechRecognitionRequest = nil
        self.speechRecognitionTask = nil
        self.transcribeButton.isEnabled = true
    }
}
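With shouldReportPartialResults set to true, the handler is called repeatedly, and each result's bestTranscription covers everything heard so far rather than only the newest words. That is why the handler assigns to myTextView.text instead of appending to it. The sketch below replays a made-up sequence of results through the same logic; PartialResult is a hypothetical stand-in for SFSpeechRecognitionResult that keeps only the formatted string and the isFinal flag, and Recognizer stands in for the view controller.

```swift
// Hypothetical stand-in for SFSpeechRecognitionResult.
struct PartialResult {
    let formattedString: String
    let isFinal: Bool
}

// Mirrors the completion handler passed to recognitionTask(with:).
final class Recognizer {
    private(set) var text = ""        // plays the role of myTextView.text
    private(set) var isRunning = true // plays the role of the audio engine

    func handle(result: PartialResult?, error: Error?) {
        var finished = false
        if let result = result {
            // Replace rather than append: each result is cumulative.
            text = result.formattedString
            finished = result.isFinal
        }
        if error != nil || finished {
            // Where the real handler stops the engine and removes the tap.
            isRunning = false
        }
    }
}
```

Appending instead of assigning would produce repeated text such as "HelloHello world", which is a common mistake when adapting this handler.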


Having configured the recognition task, all that remains in this phase of the process is to install a tap on the input node of the audio engine, then start the engine running:

let recordingFormat = inputNode.outputFormat(forBus: 0)
inputNode.installTap(onBus: 0, bufferSize: 1024, format: recordingFormat) { (buffer: AVAudioPCMBuffer, when: AVAudioTime) in
    self.speechRecognitionRequest?.append(buffer)
}

audioEngine.prepare()
try audioEngine.start()

Note that the installTap method of the inputNode object also takes a closure, which is called each time a new buffer of captured audio is available. This closure simply appends the buffer to the speechRecognitionRequest object, where the audio is transcribed; the results are then passed to the completion handler of the speech recognition task and displayed in the Text View.
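The bufferSize of 1024 passed to installTap is a requested number of sample frames, so the audio time covered by each call to the closure depends on the sample rate of the input node's format, which is determined by the device's audio hardware. As a rough worked example, assuming a 44,100 Hz or 48,000 Hz input (both common, but not guaranteed), the sketch below computes the duration of one requested buffer and how many such buffers would arrive per second. Because the size is only a request, the buffers actually delivered may differ, so treat these figures as approximate.

```swift
import Foundation

// Duration in milliseconds of a buffer of `frames` sample frames.
func bufferDurationMs(frames: Int, sampleRate: Double) -> Double {
    return Double(frames) / sampleRate * 1000.0
}

// Approximate number of tap callbacks per second for that buffer size.
func callbacksPerSecond(frames: Int, sampleRate: Double) -> Double {
    return sampleRate / Double(frames)
}

// Assumed sample rates; in the app the real value is
// inputNode.outputFormat(forBus: 0).sampleRate.
for rate in [44_100.0, 48_000.0] {
    let ms = bufferDurationMs(frames: 1024, sampleRate: rate)
    let perSecond = callbacksPerSecond(frames: 1024, sampleRate: rate)
    print(String(format: "%.0f Hz: %.1f ms per buffer, about %.0f buffers/s",
                 rate, ms, perSecond))
}
```

At these rates each buffer covers roughly 20 to 25 ms of audio, which is why the transcription appears to update almost continuously.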


Implementing the stopTranscribing Method

Only the stopTranscribing method remains to be implemented before the app can be tested. Within the ViewController.swift file, locate and modify this method to stop the audio engine, signal the end of the audio to the recognition request and reset the buttons ready for the next session:

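@IBAction func stopTranscribing(_ sender: AnyObject) {
    if audioEngine.isRunning {
        // Stop capturing audio and tell the request that no more buffers are coming.
        audioEngine.stop()
        speechRecognitionRequest?.endAudio()
        // Return the buttons to their idle state.
        transcribeButton.isEnabled = true
        stopButton.isEnabled = false
    }
}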
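Across startTranscribing, the recognition handler and stopTranscribing, the two buttons move between an idle state and a transcribing state. A small hedged model of those transitions, using a hypothetical ButtonStates struct in place of the real UIButton outlets, makes it easy to check that the app always returns to a usable state. Note that the chapter's recognition handler re-enables only transcribeButton; the model below also disables the Stop button when a session ends on its own, which is an assumption about the intended behavior rather than what the code above does.

```swift
// Hypothetical model of the two buttons' isEnabled flags.
struct ButtonStates: Equatable {
    var transcribe: Bool
    var stop: Bool

    static let idle = ButtonStates(transcribe: true, stop: false)
    static let transcribing = ButtonStates(transcribe: false, stop: true)
}

enum Event {
    case startTapped   // startTranscribing(_:)
    case stopTapped    // stopTranscribing(_:)
    case sessionEnded  // final result or error in the recognition handler
}

func transition(from state: ButtonStates, on event: Event) -> ButtonStates {
    switch event {
    case .startTapped:
        return .transcribing
    case .stopTapped:
        // stopTranscribing only acts while the audio engine is running.
        return state == .transcribing ? .idle : state
    case .sessionEnded:
        // Assumption: also disable Stop when the session ends by itself.
        return .idle
    }
}
```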

Testing the App

Compile and run the app on a physical iOS device, grant access to the microphone and permission to use speech recognition and tap the Start Transcribing button. Speak into the device and watch as the audio is transcribed into the Text View. Tap the Stop Transcribing button at any time to end the session.

Summary

Live speech recognition is provided by the iOS Speech framework and allows speech to be transcribed into text as it is being recorded. The process involves installing a tap on the input node of an AVAudioEngine instance to stream audio buffers to appropriately configured SFSpeechRecognizer, SFSpeechAudioBufferRecognitionRequest and SFSpeechRecognitionTask objects, which perform the recognition. This chapter worked through the creation of an example app demonstrating how these components work together to implement near real-time speech recognition.
